This week was our mid-point presentation – our first chance to get feedback from our external partners on our work thus far. To condense the past two weeks of work into a presentable form, we showed two prototypes: BlackBox and the Accident Design Bureau.

BlackBox

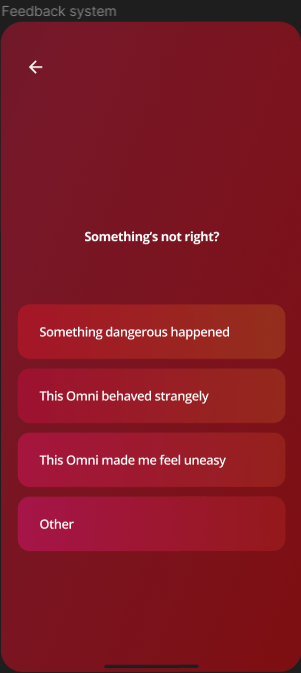

BlackBox is an app that involves users directly in sharing data. Borrowing the concept of a black box from car insurance, users transmit data about the self-driving buses they ride on in exchange for data about the manufacturer. We used this to address the risk of a lack of accountability – holding manufacturers to account for safety by letting passengers monitor them directly.

Accident Design Bureau

The ADB are a collective of citizens who stage minor accidents to road-test self-driving vehicles. They do things such as rolling empty prams into traffic, faking road signs such as the ones seen below, and penetration-testing the software on buses to highlight where the manufacturers’ standards are insufficient.

This was to address the risk of a lack of awareness – making sure that people stay alert to the risks inherent in the technology. It got the strongest reaction from our audience, although not always a positive one – likely because we didn’t have time within our presentation to explain the point of the organisation as thoroughly as we would have liked, so it came across as disruption without cause.

To make our presentation more cohesive and ground it in a specific scenario, we played a video filmed from the top deck of a bus, and moved our research evidence into a pamphlet that we handed out to the attendees. More specifically, the scenario was set in 2045, when a company called OMNI were introducing privately-owned self-driving buses onto the streets of London.

We received the following feedback:

- The app was too much of a practical solution, and didn’t take advantage of the freedom provided by the speculative setting

- The pamphlet was an excellent way to condense our research and give partners something to take away with them

- The underlying themes, which link the risks of autonomous vehicles to the larger risks of AI, were not adequately communicated

- We needed more in-person research to make our outcome more real

Looking back, I’m delighted we managed to do some hands-on making in time for the presentation – it really added to our scenario. I also think our communication strategy – presenting our outcomes directly and placing our research outside of the presentation – worked well. For our final outcome, it’s important that we communicate our underlying themes more explicitly, whatever our presentation strategy.